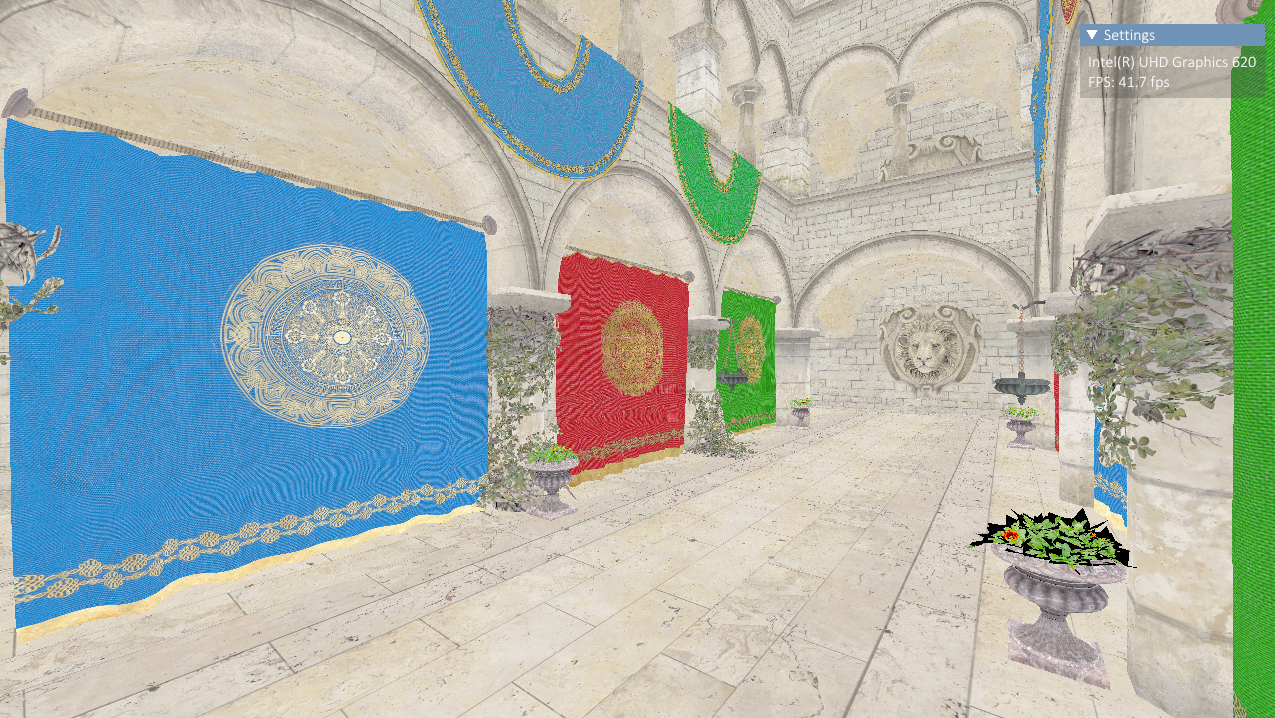

As per my previous post, implementing a buffer allocator managed to give my engine a 45-50% speedup across different scenes. Yet for a simple scene like Sponza, I am still seeing ~41FPS which is pitiful at best.

I am doing something wrong, but what?

What seems to be the problem [officer]?

Theory 1: Fragment Bound

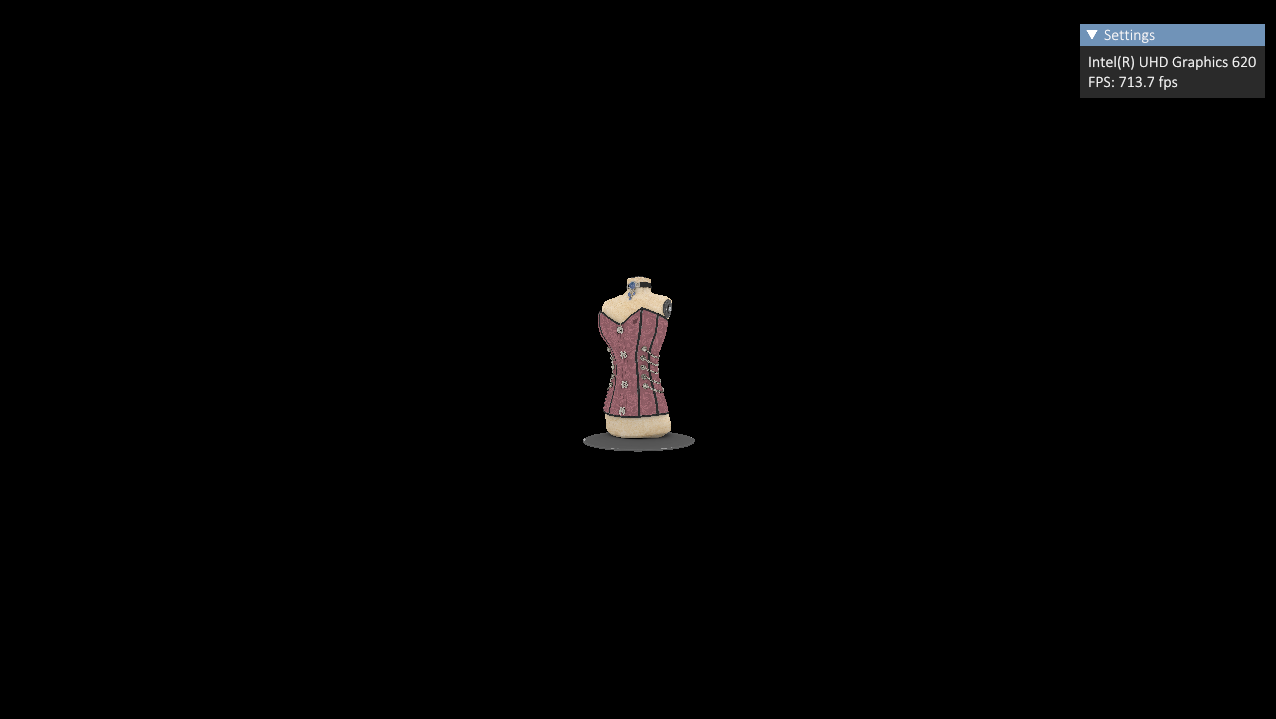

My first theory was I was fragment/texture bound. Mainly because I am using integrated graphics on a small laptop, and Sponza is drawn over the entirety of the framebuffer compared to the Corset model which is only a tiny portion of the screen.

As you can see in the image below I was reaching ~710 FPS.

However, when loading up Sponza again, moving the camera away from the scene didn’t do anything.

I then tried removing all calls to texture() to avoid any texture lookups inside the fragment shader, yet still didn’t observe any noticeable change to my FPS.

I realized that this was a naive theory as Sponza is an incredibly simple scene. Still worth the quick check though.

Theory 2: Draw Call Bound

My second theory was that I was draw call bound. Since the only thing that is different between the two scenes is the primitive count.

Looking over my entire frame it was clear that all over the place I was trying to compute hashes unnecessarily.

The two hot places in my code were:

- When I request a shader from the shader manager

- When I request a Vulkan pipeline from the render pipeline state

The hashing inside my engine needs some love, so that will be my next move: improving it.

To make things interesting I decided to make a bet with myself. If I fixed my problems related to hashing and I successfully hit 60 FPS, I will march straight down to the store and buy myself a chocolate bar.

Hashing is the problem

Why is hashing - something that was designed to speedup fetching of resources - giving me schtick?

I believe it’s due to an oversight that I had based on an incorrect understanding that computing hashes are always lightning fast.

Computing a hash and fetching its related object from a hash map is faster than destroying and recreating that object, but that doesn’t mean it’s altogether fast. While it is faster (and for small inputs, it can be incredibly fast), hashing bigger things can be slower.

Doing this multiple times every frame can easily add an overhead.

Redundant re-hashing

Since I published my real time shader editing post, I have implemented a class that handles macro definitions to compile for us shader variants (I also ended up integrating shaderc as its interface is exceptionally more straight forward compared to glslang!).

For each primitive in my scene, I request a vertex shader and fragment shader from the shader manager along with these macro definitions. This is so we can select the right shader for the job based on the primitive’s attributes and material (one primitive may have a base color texture and an occlusion map, whereas another primitive may only have a base color texture).

Originally we distinguished a shader from another shader based on its file name and shader stage - now we also use the macro definitions.

This line here in particular:

| |

Every time we request a shader, we compute a hash of its inputs and use this unique identifier to retrieve a matching shader from the shader cache.

Our problem is that we are re-computing the macros portion of the hash every time we call this function - even when the macros doesn’t change! This is particularly bad since computing a hash for std::string takes longer than computing a hash for a uint32_t.

To avoid this redundancy we can simply compute the macros portion of the hash every time we add or remove a definition.

Then when we go to compute the overall hash we can just grab this pre-computed hash for that macros object - reducing O(N) complexity to O(1).

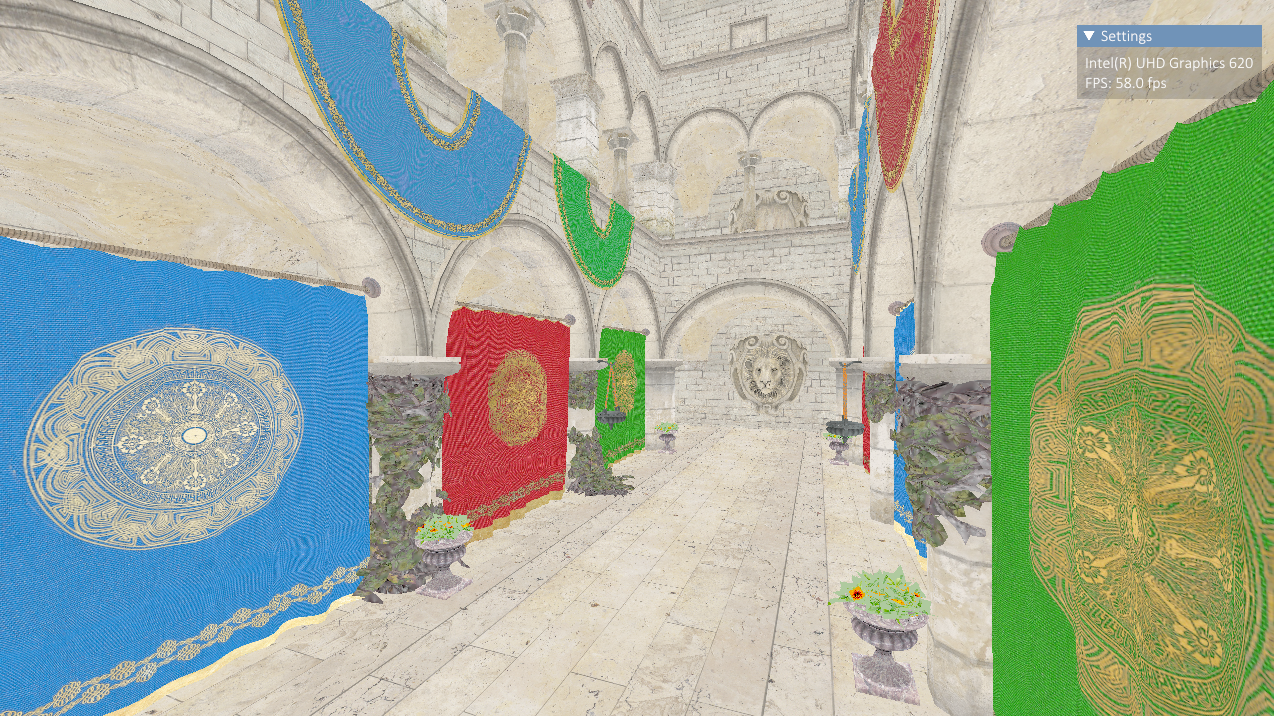

58 FPS, an increase of 17 FPS!

std::hash - is it good?

Reducing the times I was hashing something is good, but can the hashing algorithm itself be improved?

If you look back to my resource caching post, I go into a bit of detail on how I make use of std::hash to compute the uniqueness of an object. This is how glm do it, and it is briefly touched upon in the vulkan tutorial.

The C++ spec for std::hash states that the “actual hash functions are implementation-dependent”, which to me implies that the underlying algorithm changes from implementation to implementation. In my case, it is the 64-bit FNV-1a algorithm.

This is already a decent hash function, however, based on the spec I cannot be guaranteed that this will be used. Since there is no guarantee, I decided it’s probably best I look at retrofitting my own implementation of a hasher into my engine so I can force which algorithm to use.

We aren’t in cryptography, so the security and collision rate of the hashing function isn’t as much of a concern as performance. We aren’t hashing anything sensitive, and our application isn’t trying to hash hundreds and thousands (if not millions) of objects so it is unlikely we will hit collisions.

What about FNV-1?

We are already using FNV-1a, but what about its close relative FNV-1?

To quickly test this I ran each algorithm 1 million times and for each run, I generated a random 8 digit number and a random 4 character string to compute a hash using the two FNV variants:

| FNV-1a | Result (microseconds) |

|---|---|

| 8 Digit Number | 0.01739 |

| 4 Character String | 3.88363 |

| FNV-1 | Result (microseconds) |

|---|---|

| 8 Digit Number | 0.01684 |

| 4 Character String | 3.3259 |

The results show based on speed alone, that FNV-1 is slightly faster at computing numbers and exceptionally better at computing strings.

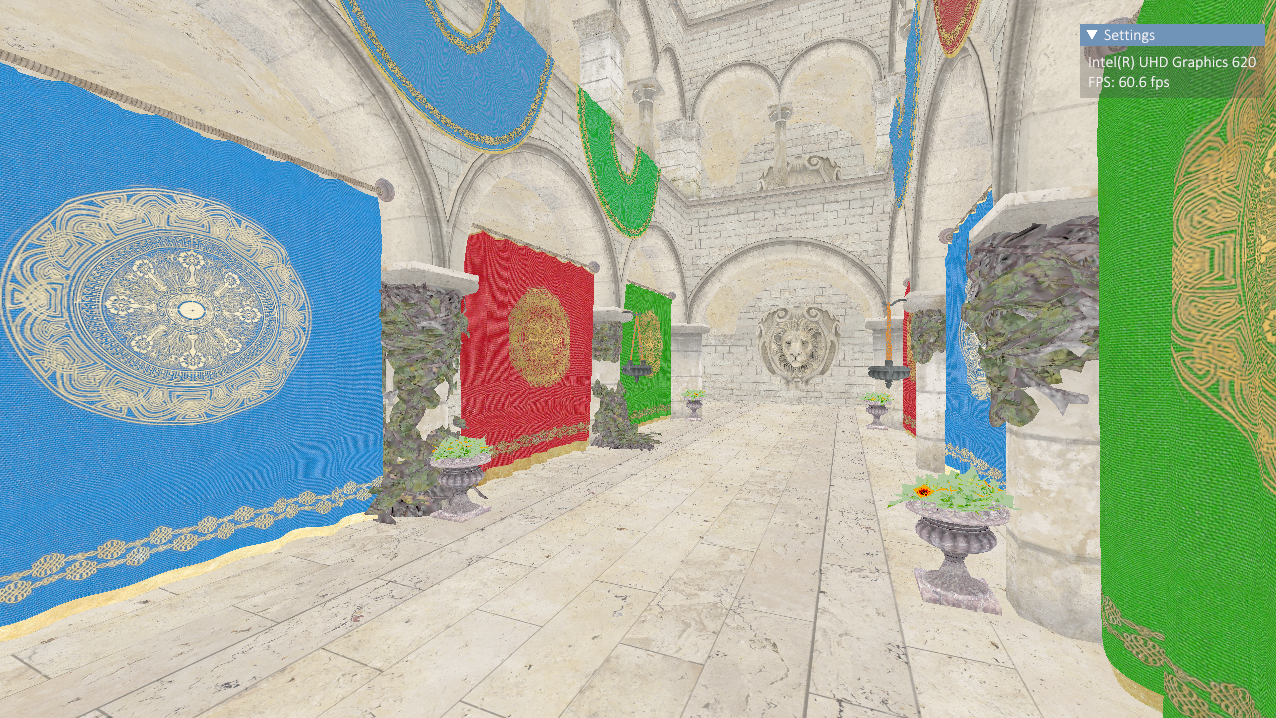

There you go, we reached 60 FPS! This is enough for me. If I start to see collisions I’ll perhaps look into implementing a different hashing algorithm.