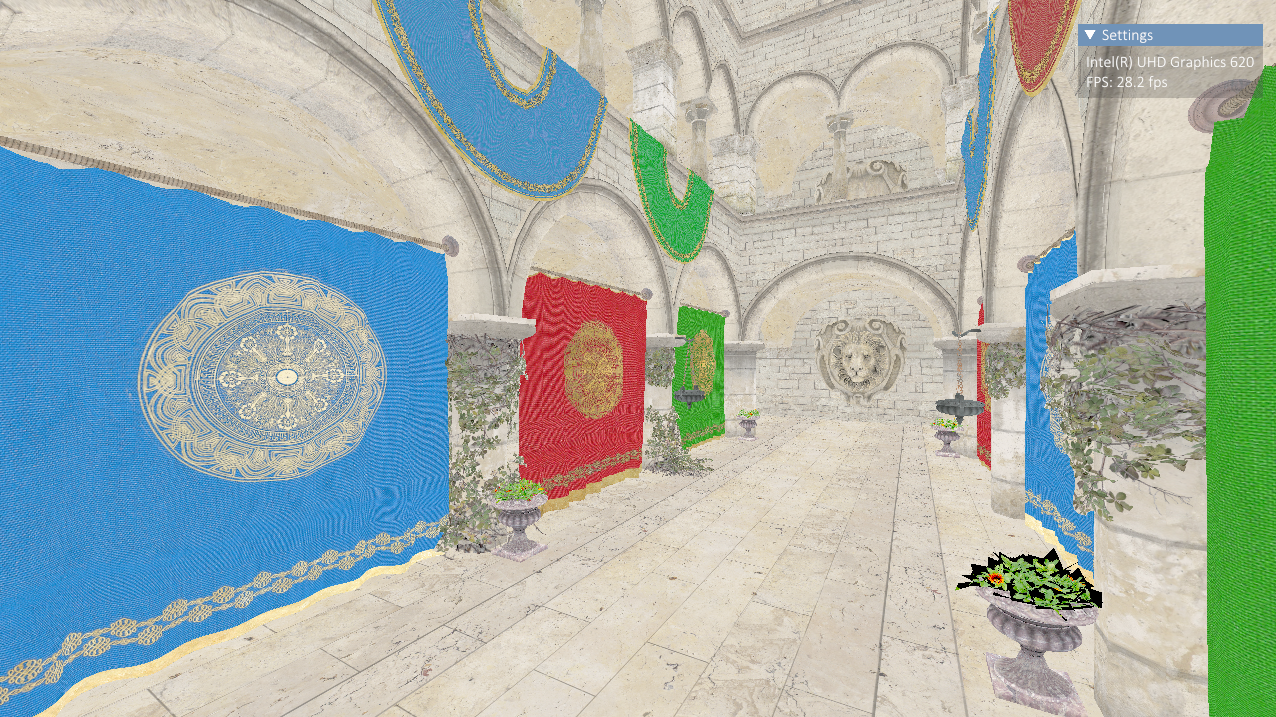

After adding a Vulkan backend to my renderer, I set up a forward renderer, threw in Crytek’s Sponza asset, and had everything working … but at 28 FPS. That is incredibly slow, even for the laptop I am working on. Glancing over at the diagnostic tools in Visual Studio, I immediately noticed a problem: my memory usage was slowly rising.

I had read about how expensive it is to call vkAllocateDescriptorSets and vkUpdateDescriptorSets, which is the main reason I implemented a caching system to avoid calling them too often. However, a quick CPU profile made it clear that the hot spot was my request_descriptor_set function. One or more of the inputs that feed into the final hash were changing, which forced a new descriptor set to be created (not good).

After a bit of debugging, I found the culprits: the VkBuffer handles in my VkDescriptorBufferInfo structs. Every frame, I was allocating a new VkBuffer for the mesh and camera transforms passed into the shader and destroying it afterwards, which on its own isn’t a great idea. Looking back, it is obvious that this would lead to a problem: a new VkBuffer means a new handle, a new hash, and therefore a new descriptor set.
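To make the failure mode concrete, below is a hypothetical descriptor-set cache keyed on a hash of the bound buffers. `BufferBinding`, the hash-mixing constant, and this version of `request_descriptor_set` are assumptions for illustration. A plain integer stands in for both `VkBuffer` and `VkDescriptorSet`, and a cache miss marks the point where vkAllocateDescriptorSets and vkUpdateDescriptorSets would run.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <unordered_map>
#include <vector>

using BufferHandle = std::uint64_t;  // stand-in for VkBuffer

struct BufferBinding {
    std::uint32_t binding;
    BufferHandle buffer;
    std::uint64_t offset;
    std::uint64_t range;
};

// Boost-style hash combine; the exact mixing function is an assumption of this sketch.
inline void hash_combine(std::size_t& seed, std::uint64_t value) {
    seed ^= std::hash<std::uint64_t>{}(value) + 0x9e3779b9 + (seed << 6) + (seed >> 2);
}

inline std::size_t hash_bindings(const std::vector<BufferBinding>& bindings) {
    std::size_t seed = 0;
    for (const BufferBinding& b : bindings) {
        hash_combine(seed, b.binding);
        hash_combine(seed, b.buffer);  // a new VkBuffer handle changes the key
        hash_combine(seed, b.offset);
        hash_combine(seed, b.range);
    }
    return seed;
}

// Hypothetical cache: an int stands in for VkDescriptorSet.
// A production cache should also compare the full key to guard against hash collisions.
class DescriptorSetCache {
public:
    int request_descriptor_set(const std::vector<BufferBinding>& bindings) {
        auto result = sets_.emplace(hash_bindings(bindings), next_set_);
        if (result.second) {  // miss: allocate + update a descriptor set here
            ++next_set_;
            ++misses_;
        }
        return result.first->second;
    }
    int misses() const { return misses_; }

private:
    std::unordered_map<std::size_t, int> sets_;
    int next_set_ = 0;
    int misses_ = 0;
};
```

If the same bindings come back every frame, the cache hits. If the buffer handle is fresh every frame, every request misses.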

Current Setup

I have a single pass (one render pass, one subpass, one vertex shader, and one fragment shader) that renders Sponza to the swapchain image.

Within this pass, any buffers needed for descriptors are allocated per frame and destroyed at the end of that frame. Our frame class currently keeps track of every buffer allocated within a single frame.

The rendering pipeline setup I have currently is something like this in semi-pseudo C++ code:

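Below is a rough sketch of that per-frame pattern. Everything in it is hypothetical. `Device`, `Frame`, and `render_frame` are stand-ins, and `BufferHandle` is a plain integer in place of `VkBuffer`, so the logic runs without a GPU.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Stand-in for a VkBuffer handle. Like vkCreateBuffer, every creation yields a fresh value.
using BufferHandle = std::uint64_t;

// Hypothetical device wrapper; a real one would call vkCreateBuffer/vkAllocateMemory.
struct Device {
    BufferHandle next_handle = 1;
    BufferHandle create_buffer(std::size_t /*size*/) { return next_handle++; }
    void destroy_buffer(BufferHandle /*handle*/) {}
};

// Hypothetical frame class: tracks every buffer created this frame so it can be destroyed.
struct Frame {
    Device* device = nullptr;
    std::vector<BufferHandle> buffers;

    BufferHandle allocate_buffer(std::size_t size) {
        assert(device != nullptr);
        BufferHandle handle = device->create_buffer(size);
        buffers.push_back(handle);
        return handle;
    }

    void clear() {
        for (BufferHandle handle : buffers) device->destroy_buffer(handle);
        buffers.clear();
    }
};

// One frame of the naive pass: a camera buffer plus one transform buffer per mesh.
// Returns the handles that end up in descriptor writes (and therefore in the cache hash).
std::vector<BufferHandle> render_frame(Frame& frame, std::size_t mesh_count) {
    std::vector<BufferHandle> bound;
    bound.push_back(frame.allocate_buffer(2 * 64));  // camera view + projection (two mat4)
    for (std::size_t i = 0; i < mesh_count; ++i) {
        bound.push_back(frame.allocate_buffer(64));  // per-mesh model matrix (one mat4)
        // ... request_descriptor_set(...), bind, draw ...
    }
    frame.clear();  // every buffer is destroyed at the end of the frame
    return bound;
}
```

Calling `render_frame` twice returns completely different handles, so nothing keyed on those handles can be reused from one frame to the next.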

You can see how, if the scene were even more mesh heavy, we would be allocating a heck of a lot more buffers. Continually allocating and destroying all of these tiny buffers is expensive.

The solution is to use an allocator.

The Buffer Allocator

A buffer allocator is an interface that manages our buffers and their allocations for us. The underlying VkBuffers can stay allocated for the lifetime of the application, so their handles don’t change and we no longer force the creation of new descriptor sets.

It is composed of three components:

- The buffer pools

- The buffer allocations

- The buffer views

The idea is that we allocate a large block of memory upfront (the BufferAllocation), where these blocks of memory are managed by a pool (the BufferPool). Then when the caller requests to “allocate” a buffer, we can just return to them a portion of the already allocated memory (the BufferView).

If the caller requests a portion of memory that is larger than what is left in the current BufferAllocation, then we request a brand new block that will invoke an actual allocation.
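Here is a minimal sketch of the three pieces. It assumes a bump (linear) allocator inside each block and uses an integer `BufferHandle` in place of `VkBuffer`. `current_size` is the name used later in this post, but `request`, `can_fit`, and `allocation_count` are illustrative. A real `BufferAllocation` would also own its `VkDeviceMemory` and a mapped pointer.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using BufferHandle = std::uint64_t;  // stand-in for VkBuffer

// BufferView: a sub-range of a long-lived buffer, handed out instead of a new VkBuffer.
struct BufferView {
    BufferHandle buffer;  // shared handle, stable across frames
    std::size_t offset;   // byte offset into that buffer
    std::size_t size;     // bytes reserved for this view
};

// BufferAllocation: one large block created up front; views are carved out by bumping current_size.
struct BufferAllocation {
    BufferHandle buffer;
    std::size_t capacity;
    std::size_t current_size = 0;

    bool can_fit(std::size_t size) const { return current_size + size <= capacity; }

    BufferView allocate(std::size_t size) {
        assert(can_fit(size));
        BufferView view{buffer, current_size, size};
        current_size += size;
        return view;
    }
};

// BufferPool: owns the blocks for one usage type; creates a block only when the active one is full.
class BufferPool {
public:
    explicit BufferPool(std::size_t block_size) : block_size_(block_size) {}

    BufferView request(std::size_t size) {
        assert(size <= block_size_ && "a view cannot span two allocations");
        // Skip past blocks that cannot hold the request.
        while (active_ < allocations_.size() && !allocations_[active_].can_fit(size)) {
            ++active_;
        }
        if (active_ == allocations_.size()) {
            // The only place real memory would be allocated (vkCreateBuffer + vkAllocateMemory).
            allocations_.push_back(BufferAllocation{next_handle_++, block_size_});
        }
        return allocations_[active_].allocate(size);
    }

    std::size_t allocation_count() const { return allocations_.size(); }

private:
    std::size_t block_size_;
    std::vector<BufferAllocation> allocations_;
    std::size_t active_ = 0;
    BufferHandle next_handle_ = 1;  // fake handle source for this sketch
};
```

Consider a 256-byte pool. Two 128-byte requests share one handle, at offsets 0 and 128. A third request opens a second block.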

Memory Efficiency

There is a trade-off here between how much allocating and freeing you want to do per frame and how memory efficient you want to be. The larger your buffer allocations, the less allocating and freeing you will do, but the more redundant memory will sit unused on your system. Smaller buffer allocations, on the other hand, mean worse locality, more memory fragmentation, and more allocation calls, all of which hurt performance. Something to think about.

I currently set my allocation size to 256,000 bytes as this holds enough memory for any single buffer that I might need. For a more robust engine, however, you’ll probably want a way to handle larger allocations that go above the default allocation size.

It isn’t uncommon to make large allocations for shader storage buffer objects (VK_BUFFER_USAGE_STORAGE_BUFFER_BIT). In my case, for example, requesting a buffer larger than 256,000 bytes would crash, because the allocator cannot serve a buffer view that spans two different allocations.

A way to circumvent this could be to check the requested size and if it is going to cause a spillover, then create a new allocation altogether that can fit the requested size.
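One hedged way to do that check, assuming the 256,000-byte default from above, is to size the new block as the larger of the default and the request. An oversized request then gets a dedicated allocation that fits it. `fits_in_block` and `block_size_for` are hypothetical helpers.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>

constexpr std::size_t kDefaultBlockSize = 256000;  // default allocation size used in this post

// True if the request still fits in the active block.
inline bool fits_in_block(std::size_t current_size, std::size_t capacity, std::size_t requested) {
    return current_size + requested <= capacity;
}

// Capacity for the next block when the active one cannot serve the request.
// Oversized requests get a dedicated block sized to fit, so a view never spans two allocations.
inline std::size_t block_size_for(std::size_t requested) {
    assert(requested > 0);
    return std::max(kDefaultBlockSize, requested);
}
```

A design choice worth weighing: a dedicated oversized block could either be kept for reuse or freed on reset. Keeping it avoids reallocating every frame, at the cost of holding that memory.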

Buffer Usage Types

In Vulkan, there are a few different usage types. The main one that you will be using for per-frame resources will be VK_BUFFER_USAGE_UNIFORM_BUFFER_BIT. In my case, I also have dynamic vertex buffers for my GUI which will change every frame (VK_BUFFER_USAGE_VERTEX_BUFFER_BIT).

To cover all of these types, the buffer allocator holds a mapping from each buffer usage to its pool. Pools are created lazily, so we don’t need to know every usage type upfront. Whenever you request a buffer with a usage the allocator hasn’t seen yet, it creates the matching pool.
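Here is a sketch of that lazy mapping, assuming pools are keyed by the raw usage flags. `BufferAllocator`, `BufferPool`, and `pool_for` are hypothetical names. The two flag constants reproduce the real values of `VK_BUFFER_USAGE_UNIFORM_BUFFER_BIT` (0x10) and `VK_BUFFER_USAGE_VERTEX_BUFFER_BIT` (0x80), so the sketch compiles without the Vulkan headers.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <unordered_map>

using BufferUsageFlags = std::uint32_t;                 // stand-in for VkBufferUsageFlags
constexpr BufferUsageFlags kUniformBufferUsage = 0x10;  // VK_BUFFER_USAGE_UNIFORM_BUFFER_BIT
constexpr BufferUsageFlags kVertexBufferUsage = 0x80;   // VK_BUFFER_USAGE_VERTEX_BUFFER_BIT

// Minimal pool stand-in; the real pool would own its BufferAllocations.
struct BufferPool {
    BufferUsageFlags usage;
    std::size_t block_size;
};

class BufferAllocator {
public:
    explicit BufferAllocator(std::size_t block_size) : block_size_(block_size) {}

    // Returns the pool for this usage, creating it the first time the usage is requested.
    BufferPool& pool_for(BufferUsageFlags usage) {
        assert(usage != 0);
        return pools_.emplace(usage, BufferPool{usage, block_size_}).first->second;
    }

    std::size_t pool_count() const { return pools_.size(); }

private:
    std::size_t block_size_;
    std::unordered_map<BufferUsageFlags, BufferPool> pools_;  // element references survive rehashing
};
```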

Descriptor Sets

We need to now alter how we update our descriptor sets.

In our original setup this was a lot more straightforward. We could pass each allocated buffer directly into whichever descriptor sets needed it, which worked because each of our buffers mapped 1:1 to a VkBuffer. Now things are a little more involved, as several buffer views can be backed by the same VkBuffer.

Fortunately, Vulkan lets us specify an offset and a size alongside each VkBuffer, so we control which portion of the buffer is written into the descriptor set.

I just had to modify the resource binding system to allow us to specify offsets.

To change our original setup, we will do something like this:

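Here is a sketch of what the per-mesh loop might look like with views. It assumes a single long-lived uniform buffer and a hypothetical `ResourceBindingState` that now records an offset and range for each (set, binding). The names, the set and binding numbers, and the 64-byte model matrix size are illustrative only.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <utility>
#include <vector>

using BufferHandle = std::uint64_t;  // stand-in for VkBuffer

struct BufferView { BufferHandle buffer; std::size_t offset; std::size_t size; };

// Hypothetical stand-in for the allocator: a linear cursor over one long-lived uniform buffer.
struct LinearBuffer {
    BufferHandle buffer;
    std::size_t current_size = 0;

    BufferView allocate(std::size_t size) {
        assert(size > 0);
        BufferView view{buffer, current_size, size};
        current_size += size;
        return view;
    }
};

// What the binding system now records per (set, binding): a handle plus a byte range.
struct BoundBuffer { BufferHandle buffer; std::size_t offset; std::size_t range; };

class ResourceBindingState {
public:
    void bind_buffer(const BufferView& view, std::uint32_t set, std::uint32_t binding) {
        bound_[{set, binding}] = BoundBuffer{view.buffer, view.offset, view.size};
    }
    const BoundBuffer& at(std::uint32_t set, std::uint32_t binding) const {
        return bound_.at({set, binding});
    }

private:
    std::map<std::pair<std::uint32_t, std::uint32_t>, BoundBuffer> bound_;
};

// Per-mesh loop: the handle stays the same for every mesh, only the offset moves.
std::vector<BoundBuffer> record_meshes(LinearBuffer& uniforms, ResourceBindingState& state,
                                       std::size_t mesh_count) {
    std::vector<BoundBuffer> flushed;
    for (std::size_t i = 0; i < mesh_count; ++i) {
        BufferView view = uniforms.allocate(64);  // one mat4 model matrix
        state.bind_buffer(view, 0, 1);            // set 0, binding 1: illustrative layout
        flushed.push_back(state.at(0, 1));        // what the flush before a draw would see
    }
    return flushed;
}
```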

This means when we go to flush our bound resources we can use the offset and size of the buffer view to create the necessary descriptor sets.

We can specify our offsets in two different ways in core Vulkan:

- At write time

- At bind time

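The former is generally a bit faster, as you only specify the offsets once. Offsets supplied at bind time are dynamic offsets, passed through the pDynamicOffsets parameter of vkCmdBindDescriptorSets for dynamic descriptor types such as VK_DESCRIPTOR_TYPE_UNIFORM_BUFFER_DYNAMIC. They force the driver to spend cycles computing the final offsets every time you call vkCmdBindDescriptorSets, and therefore every frame (source: constant data tutorial).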

Since our offsets won’t change, we will supply them at write time (once and done).

To do this we simply create a VkDescriptorBufferInfo for each resource descriptor that has a buffer bound, and use its .range and .offset properties to map our buffer views to the descriptor set write:

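Here is a sketch of that loop. To keep it compilable without the Vulkan headers, the structs below are stand-ins that copy only the relevant member names of `VkDescriptorBufferInfo` (`buffer`, `offset`, `range`) and `VkWriteDescriptorSet` (`dstBinding`, `descriptorCount`, `pBufferInfo`). The real write struct also needs `sType`, `dstSet`, `dstArrayElement`, and `descriptorType`. `BoundBuffer` and `build_writes` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using BufferHandle = std::uint64_t;  // stand-in for VkBuffer
using DeviceSize = std::uint64_t;    // stand-in for VkDeviceSize

// Mirrors VkDescriptorBufferInfo.
struct DescriptorBufferInfo { BufferHandle buffer; DeviceSize offset; DeviceSize range; };

// Mirrors only the VkWriteDescriptorSet members used here.
struct WriteDescriptorSet {
    std::uint32_t dstBinding;
    std::uint32_t descriptorCount;
    const DescriptorBufferInfo* pBufferInfo;
};

// A buffer view bound at some binding: shared handle plus its sub-range.
struct BoundBuffer { std::uint32_t binding; BufferHandle buffer; DeviceSize offset; DeviceSize size; };

// One buffer info per bound view, with the view's offset/size mapped to offset/range.
// infos is reserved up front so the pBufferInfo pointers stay valid while we push_back.
std::vector<WriteDescriptorSet> build_writes(const std::vector<BoundBuffer>& bound,
                                             std::vector<DescriptorBufferInfo>& infos) {
    infos.clear();
    infos.reserve(bound.size());
    std::vector<WriteDescriptorSet> writes;
    writes.reserve(bound.size());
    for (const BoundBuffer& b : bound) {
        infos.push_back(DescriptorBufferInfo{b.buffer, b.offset, b.size});
        writes.push_back(WriteDescriptorSet{b.binding, 1, &infos.back()});
    }
    return writes;
}
```

With the real types, the writes would then go to `vkUpdateDescriptorSets(device, count, writes, 0, nullptr)`. The `reserve` call matters. If `infos` reallocated mid-loop, the earlier `pBufferInfo` pointers would dangle.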

Vulkan Offset Alignment

This isn’t enough, though. We need to adhere to the Vulkan spec; otherwise, unless we are lucky (or unlucky, depending on how you look at it), hitting run will produce validation errors like the following:

[ VUID-VkWriteDescriptorSet-descriptorType-00327 ] Object 0: handle = 0x18f9dbe56b8, type = VK_OBJECT_TYPE_DEVICE; | MessageID = 0xfdcfe89e | vkUpdateDescriptorSets(): pDescriptorWrites[3].pBufferInfo[0].offset (0xd0) must be a multiple of device limit minUniformBufferOffsetAlignment 0x40. The Vulkan spec states: If descriptorType is VK_DESCRIPTOR_TYPE_UNIFORM_BUFFER or VK_DESCRIPTOR_TYPE_UNIFORM_BUFFER_DYNAMIC, the offset member of each element of pBufferInfo must be a multiple of VkPhysicalDeviceLimits::minUniformBufferOffsetAlignment (https://vulkan.lunarg.com/doc/view/1.2.162.0/windows/1.2-extensions/vkspec.html#VUID-VkWriteDescriptorSet-descriptorType-00327)

The Vulkan spec exposes a physical device limit for this, which we should query and use when calculating our allocations.

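For each BufferAllocation, we look at its usage type and store the matching alignment from VkPhysicalDeviceLimits: minUniformBufferOffsetAlignment for uniform buffers and minStorageBufferOffsetAlignment for storage buffers.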

To calculate the aligned size of a buffer we can do this:

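Here is a minimal sketch of the rounding. It relies on the spec's guarantee that `minUniformBufferOffsetAlignment` and `minStorageBufferOffsetAlignment` are powers of two. `DeviceLimits` mirrors just those two `VkPhysicalDeviceLimits` members, and `BufferKind` and `alignment_for` are hypothetical helpers.

```cpp
#include <cassert>
#include <cstdint>

using DeviceSize = std::uint64_t;  // stand-in for VkDeviceSize

// Mirrors the two VkPhysicalDeviceLimits members relevant to buffer descriptors.
struct DeviceLimits {
    DeviceSize minUniformBufferOffsetAlignment;
    DeviceSize minStorageBufferOffsetAlignment;
};

enum class BufferKind { Uniform, Storage, Other };

// Picks the alignment stored on a BufferAllocation for its usage type.
inline DeviceSize alignment_for(BufferKind kind, const DeviceLimits& limits) {
    switch (kind) {
        case BufferKind::Uniform: return limits.minUniformBufferOffsetAlignment;
        case BufferKind::Storage: return limits.minStorageBufferOffsetAlignment;
        default: return 1;  // assumption of this sketch: no descriptor offset limit applies
    }
}

// Rounds size up to the next multiple of alignment (alignment must be a power of two).
inline DeviceSize align_up(DeviceSize size, DeviceSize alignment) {
    assert(alignment != 0 && (alignment & (alignment - 1)) == 0);
    return (size + alignment - 1) & ~(alignment - 1);
}
```

Every block starts at offset 0, so rounding each view's size up this way keeps every subsequent offset aligned. With the 0x40 limit from the error above, the offending offset 0xd0 would be rounded up to 0x100.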

Resetting

The last piece of the puzzle is now resetting our allocator.

If you remember back to our original setup, we cleared our buffers at the end of every frame which destroyed them completely. This time we want to keep our buffers in memory so their VkBuffer handles remain (and we don’t force any recreation of descriptor sets).

We still need some form of reset, however. Otherwise, each time we request a new buffer view, the allocator keeps growing, allocating new BufferAllocations until the app runs out of memory.

To do this we can simply reset the current_size to zero at the end of every frame.
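Here is a sketch of the reset, with one caveat stated as an assumption. If more than one frame can be in flight, rewinding at the end of the CPU frame could let the next frame overwrite data the GPU is still reading. The sketch therefore keeps one hypothetical pool per frame in flight and rewinds a pool only when its frame slot comes round again. In a real renderer, that would happen after waiting on that frame's fence. Only `current_size` comes from the text above; the other names are illustrative.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Minimal stand-in for the block type: only the bump offset matters for resetting.
struct BufferAllocation {
    std::size_t capacity;
    std::size_t current_size = 0;
};

struct BufferPool {
    std::vector<BufferAllocation> allocations;
    std::size_t active = 0;

    // Rewind every block instead of freeing it, so the VkBuffer handles (and any
    // cached descriptor sets that reference them) stay valid for the next frame.
    void reset() {
        assert(active <= allocations.size());
        for (BufferAllocation& allocation : allocations) allocation.current_size = 0;
        active = 0;
    }
};

// Assumption: one pool per frame in flight.
constexpr std::size_t kFramesInFlight = 2;

struct FrameAllocators {
    std::array<BufferPool, kFramesInFlight> pools;

    // Call once the GPU has finished the frame that last used this slot.
    BufferPool& begin_frame(std::size_t frame_index) {
        BufferPool& pool = pools[frame_index % kFramesInFlight];
        pool.reset();
        return pool;
    }
};
```

With a single frame in flight, this reduces to the simple end-of-frame reset described above.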

Results

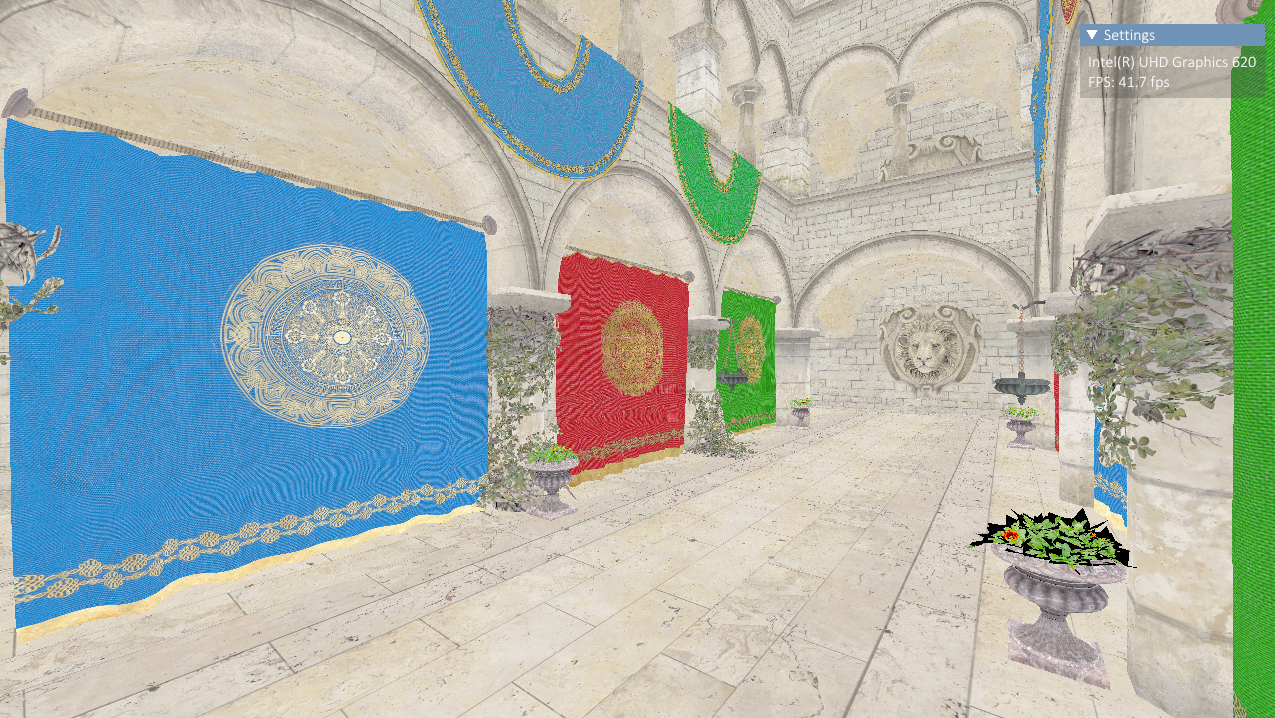

Here I am seeing an improvement of roughly 46% (from 28 FPS to 41 FPS). With the Corset glTF model the improvement is even larger (~400 FPS to ~800 FPS).

I was expecting more of an uplift, as 41 FPS is still very low for a simple scene like Sponza; I should easily be able to hit 60 FPS. I will keep working on improving my framework.